Paper resources

Pyramid Scene Parsing Network project page

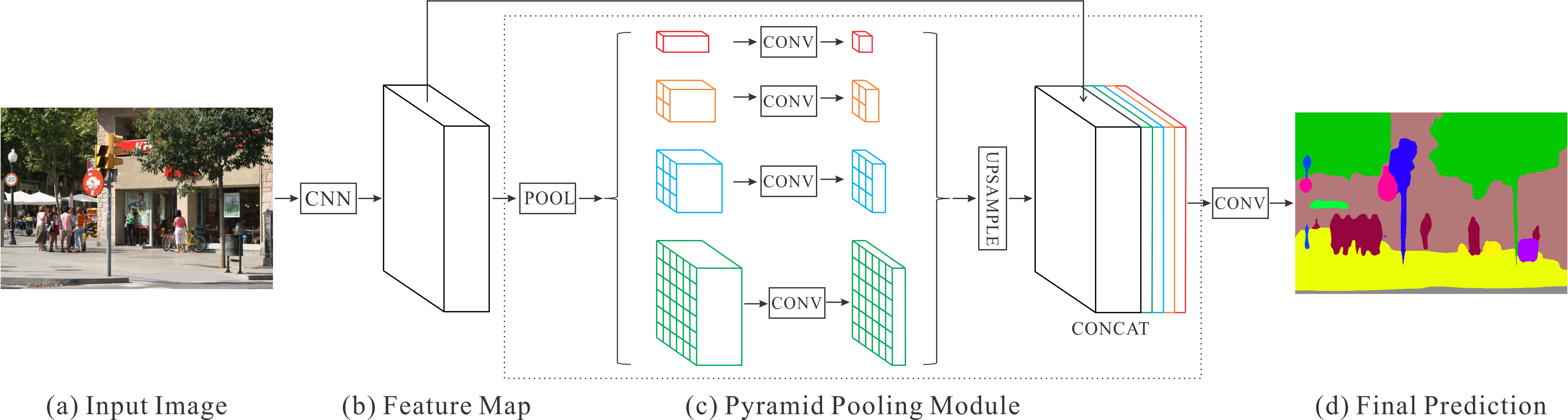

Network architecture

Example results

Implementations

pspnet: a PyTorch implementation of PSPNet that also provides weights trained on the PASCAL VOC 2012 and BRATS 2017 datasets.

PSPNet-TF-Reproduce: a TensorFlow implementation of PSPNet.

Code walkthrough

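    import torch
    from torch import nn
    from torch.nn import functional as F

    # extractors is a project-local module (not part of PyTorch) that provides the ResNet backbones
    import extractors


    class PSPModule(nn.Module):
        def __init__(self, features, out_features=1024, sizes=(1, 2, 3, 6)):
            super().__init__()
            # Pyramid pooling module: each entry in sizes is the output grid of one
            # adaptive average pooling branch (1x1, 2x2, 3x3 and 6x6 bins)
            self.stages = nn.ModuleList([self._make_stage(features, size) for size in sizes])
            # The bottleneck takes the concatenation of all feature maps: the 4 pooled
            # branches plus the original input, i.e. features * (len(sizes) + 1) channels
            self.bottleneck = nn.Conv2d(features * (len(sizes) + 1), out_features, kernel_size=1)
            self.relu = nn.ReLU()

        def _make_stage(self, features, size):
            # Pool to a size x size grid, then apply a 1x1 conv that keeps the channel count
            prior = nn.AdaptiveAvgPool2d(output_size=(size, size))
            conv = nn.Conv2d(features, features, kernel_size=1, bias=False)
            return nn.Sequential(prior, conv)

        def forward(self, feats):
            # The input feature map has shape BxCxHxW
            h, w = feats.size(2), feats.size(3)
            # Before concatenation, bilinearly upsample every pooled branch back to HxW
            # (F.interpolate replaces the deprecated F.upsample)
            priors = [F.interpolate(input=stage(feats), size=(h, w), mode='bilinear', align_corners=False)
                      for stage in self.stages] + [feats]
            bottle = self.bottleneck(torch.cat(priors, 1))
            return self.relu(bottle)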
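To make the shapes in the pyramid pooling step concrete, the following minimal sketch runs the same pool, upsample and concatenate sequence on a random tensor. The helper name `pyramid_concat` and the toy input size are assumptions chosen for illustration, and the per-branch 1x1 convolution is left out, so this is not the module itself.

```python
import torch
from torch import nn
from torch.nn import functional as F


def pyramid_concat(feats, sizes=(1, 2, 3, 6)):
    """Pool feats to each grid size, upsample back, and concatenate with the input.

    Illustrative helper (not part of the original code); the 1x1 convs are omitted.
    """
    h, w = feats.size(2), feats.size(3)
    branches = []
    for size in sizes:
        # B x C x size x size
        pooled = nn.AdaptiveAvgPool2d(output_size=(size, size))(feats)
        # back to B x C x H x W
        branches.append(F.interpolate(pooled, size=(h, w), mode='bilinear', align_corners=False))
    # B x C*(len(sizes)+1) x H x W
    return torch.cat(branches + [feats], dim=1)


feats = torch.randn(1, 8, 12, 12)  # assumed toy input: B=1, C=8, H=W=12
out = pyramid_concat(feats)
print(out.shape)  # torch.Size([1, 40, 12, 12]), since 8 * (4 + 1) = 40
```

The first branch (size 1) is simply the global average of each channel broadcast over the whole map, while the last 8 channels are the untouched input, which is why the bottleneck conv expects `features * (len(sizes) + 1)` input channels.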

    class PSPUpsample(nn.Module):
        def __init__(self, in_channels, out_channels):
            super().__init__()
            self.conv = nn.Sequential(
                nn.Conv2d(in_channels, out_channels, 3, padding=1),
                nn.BatchNorm2d(out_channels),
                nn.PReLU()
            )

        def forward(self, x):
            h, w = 2 * x.size(2), 2 * x.size(3)
            # 2x bilinear upsampling, followed by a 3x3 conv (with BatchNorm and PReLU)
            # that keeps the spatial resolution unchanged
            p = F.interpolate(input=x, size=(h, w), mode='bilinear', align_corners=False)
            return self.conv(p)
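As a quick check of the resolution arithmetic, the sketch below applies three 2x bilinear steps and compares the resulting shape with a single 8x interpolation. The helper `upsample_2x` and the toy tensor are assumptions for illustration; the conv, BatchNorm and PReLU layers of `PSPUpsample` are omitted.

```python
import torch
from torch.nn import functional as F


def upsample_2x(x):
    """Double the spatial size with bilinear interpolation (illustrative helper)."""
    h, w = 2 * x.size(2), 2 * x.size(3)
    return F.interpolate(x, size=(h, w), mode='bilinear', align_corners=False)


x = torch.randn(1, 4, 6, 6)  # assumed toy feature map

step = x
for _ in range(3):           # three successive 2x stages: 6 -> 12 -> 24 -> 48
    step = upsample_2x(step)

# A single 8x interpolation reaches the same resolution in one step
direct = F.interpolate(x, scale_factor=8, mode='bilinear', align_corners=False)

print(step.shape, direct.shape)  # both are torch.Size([1, 4, 48, 48])
```

Only the shapes are guaranteed to match here. The interpolated values generally differ between the two routes, and in the actual module a learned convolution sits between the upsampling steps.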

    class PSPNet(nn.Module):
        def __init__(self, n_classes=18, sizes=(1, 2, 3, 6), psp_size=2048, deep_features_size=1024,
                     backend='resnet34', pretrained=True):
            super().__init__()
            # Front-end feature extractor (backbone) of PSPNet
            self.feats = getattr(extractors, backend)(pretrained)
            # psp_size defaults to 2048 because the last stage of ResNet-50/101/152 has 2048 channels.
            # ResNet-18/34 produce 512 channels there (256 in the third stage), so those backends
            # need psp_size=512 and deep_features_size=256 instead of the defaults.
            self.psp = PSPModule(psp_size, 1024, sizes)
            self.drop_1 = nn.Dropout2d(p=0.3)
            # The paper upsamples by 8x in one step; this implementation uses three successive 2x stages
            self.up_1 = PSPUpsample(1024, 256)
            self.up_2 = PSPUpsample(256, 64)
            self.up_3 = PSPUpsample(64, 64)
            self.drop_2 = nn.Dropout2d(p=0.15)
            # The final layer maps the 64-channel features to an n_classes pixel label map
            self.final = nn.Sequential(
                nn.Conv2d(64, n_classes, kernel_size=1),
                nn.LogSoftmax(dim=1)  # normalize over the class (channel) dimension
            )
            self.classifier = nn.Sequential(
                nn.Linear(deep_features_size, 256),
                nn.ReLU(),
                nn.Linear(256, n_classes)
            )

        def forward(self, x):
            # Get the ResNet stage-4 features (f) and stage-3 features (class_f)
            f, class_f = self.feats(x)
            p = self.psp(f)
            p = self.drop_1(p)
            p = self.up_1(p)
            p = self.drop_2(p)
            p = self.up_2(p)
            p = self.drop_2(p)
            p = self.up_3(p)
            p = self.drop_2(p)
            # The stage-3 features serve as auxiliary information; their channel count is 1024
            # (deep_features_size). Global max pooling reduces class_f to BxCx1x1, then it is reshaped to BxC.
            auxiliary = F.adaptive_max_pool2d(input=class_f, output_size=(1, 1)).view(-1, class_f.size(1))
            # Returns the per-pixel log-probabilities, B x n_classes x H x W,
            # and the auxiliary class scores, B x n_classes
            return self.final(p), self.classifier(auxiliary)
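Because the segmentation head ends in `LogSoftmax` while the auxiliary classifier returns raw scores, the two outputs call for different loss functions. The sketch below shows one plausible pairing on random stand-in tensors. The loss choices, the multi-hot "class present in the image" target and the weight `alpha` are all assumptions for illustration and are not taken from the code above.

```python
import torch
from torch import nn
from torch.nn import functional as F

torch.manual_seed(0)
n_classes, B, H, W = 18, 2, 16, 16

# Stand-ins for the two PSPNet outputs (random values, not real predictions)
seg_log_probs = F.log_softmax(torch.randn(B, n_classes, H, W), dim=1)  # like self.final(p)
cls_logits = torch.randn(B, n_classes)                                  # like self.classifier(auxiliary)

# Assumed targets: a per-pixel label map and a multi-hot vector of the classes present
seg_target = torch.randint(0, n_classes, (B, H, W))
cls_target = torch.zeros(B, n_classes)
for b in range(B):
    cls_target[b, seg_target[b].unique()] = 1.0

seg_loss = nn.NLLLoss()(seg_log_probs, seg_target)         # NLLLoss expects log-probabilities
cls_loss = nn.BCEWithLogitsLoss()(cls_logits, cls_target)  # BCEWithLogitsLoss expects raw logits
alpha = 0.4                                                # assumed weight of the auxiliary term
loss = seg_loss + alpha * cls_loss
print(loss.item())
```

Feeding the `LogSoftmax` output into `nn.CrossEntropyLoss` would apply the log-softmax twice, which is why `NLLLoss` is the natural match for the segmentation output.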